4 Frequencies and Zipf’s Law

4.1 Exploration

Today’s reading was an example of an exploratory study. In contrast to the other papers we have read, an exploratory study is usually qualitative rather than quantitative. That means there is a rather broad research question with many categories and a rather basic statistical analysis. The typical quantitative study tends to be focused on only a few categories but goes much more in depth on the analysis. Its main aim is usually hypothesis testing. In exploratory and qualitative studies, deriving hypotheses is often the end result. Both formats go hand in hand and are equally important to the research process.

4.2 Frequency and scales

Since the linguistics and methodology this week were rather straightforward, let’s take a little detour and think about frequency, our most important measure.

Most of the data we have encountered was count data. Even though a count is one of the simplest measures possible, there are many forms in which you will encounter it.

- Absolute frequency

    - Basic measure

    - Should always be reported since everything else is based on it

    - Sometimes hard to visualize

    - Hard to interpret across different sample or category sizes

- Relative frequency

    - Absolute frequency divided by all occurrences

    - Either between 0 and 1 or 0% and 100%

    - Makes it possible to compare between different sized samples or sub-categories

    - Extremely low relative frequency is sometimes reported as normalized frequency, e.g. 1 per million = 0.000001 = 0.0001%

- Log scale

    - Most commonly base 10, i.e. 1 to 10 is the same distance as 10 to 100, 100 to 1,000, etc.

    - Uses:

        - Visualize heavily skewed data

        - Make exponential data linear (e.g. word counts)

        - Approximate human perception of quantities

Log scale presentation of frequencies is common with count data for two reasons. Firstly, in most sets of words, phrases, or structures, a few types are extremely frequent and many types are extremely infrequent (Zipf’s law). This makes visualizing, or generally reasoning about, quantity differences difficult on a linear scale. Secondly, a log scale approximates how humans perceive quantities, which we return to in Section 4.5.
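To get a feeling for what the log scale does to skewed counts, here is a minimal sketch in Python. It does not use real corpus data. It assumes an idealized Zipfian distribution in which the type at rank r occurs C / r times, and both C and the chosen ranks are made-up values for illustration.

```python
import math

# Idealized Zipfian frequencies: the type at rank r occurs C / r times.
# C and the ranks are hypothetical values, not taken from any corpus.
C = 100_000
ranks = [1, 10, 100, 1_000, 10_000]
frequencies = [C / r for r in ranks]

for r, f in zip(ranks, frequencies):
    print(f"rank {r:>6}  freq {f:>9.1f}  log10(freq) {math.log10(f):.1f}")

# Differences between neighbouring rows on the raw scale and on the log10 scale.
raw_steps = [a - b for a, b in zip(frequencies, frequencies[1:])]
log_steps = [math.log10(a) - math.log10(b)
             for a, b in zip(frequencies, frequencies[1:])]

print("raw steps:", raw_steps)
print("log steps:", log_steps)
```

On the raw scale the steps shrink from tens of thousands to double digits, which is why a linear axis squashes almost all types against zero. On the log scale every step has the same size.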

4.3 Collocation

Major Concepts

- Collocation and Co-occurrence

- Frequency and relative frequency

- Probabilistic properties of language

4.4 Quantities in corpora

In some sense Corpus Linguistics is really simple since it is largely focused on one basic indicator: frequency of occurrence. All annotations, such as lemma, POS, text type, etc., are ultimately measured in counts. There are hardly any other types of indicators, such as temperature, color, velocity, or volume. Even word and sentence lengths are fundamentally counts (of characters/phonemes, or tokens/words).

This simplicity, however, comes at a cost: the interpretation is rarely straightforward. We’ve talked about how ratios become important if you are working with differently sized samples. I use sample here very broadly. You can think of different corpora as different samples, but even within the same corpus, it depends on what you look at. Let’s assume we were investigating the use of the amplifier adverb utterly. As a first exploration, we might be interested in whether there are some obvious differences between British and American usage. For the British corpus, let’s take the BNC (The BNC Consortium 2007), and for the American corpus the COCA (Davies 2008).

    > cqp
    BNC; utterly = "utterly" %c
    COCA-S; utterly = "utterly" %c
    size BNC:utterly; size COCA-S:utterly
    ...
    1204
    4300

The American sample returns more matches. Does that mean it is more common? No! The American sample is also about 5 times larger. We need to account for the different sizes by normalizing the counts. The simplest way to achieve that is by calculating a simple ratio, or relative frequency.

- \(\frac{1248}{112156361} = 0.0000111\)

- \(\frac{4401}{542341719} = 0.0000081\)

Now we can see that utterly actually scores higher in the British sample. We can make those small numbers a bit prettier by turning them into occurrences per hundred, a.k.a. percentages, which gives us 0.00111% and 0.00081%. That’s still not intuitive, so let’s move the decimal point all the way into intuitive territory by taking it per million: utterly occurs 11.1 times per million words in the British sample and 8.1 times in the American sample.
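If you want to redo this arithmetic yourself, a small Python sketch is enough. It uses the counts and corpus sizes from the two fractions above. The function name `per_million` and the dictionary layout are my own choices for illustration, not part of any corpus tool.

```python
def per_million(count: int, corpus_size: int) -> float:
    """Normalized frequency: occurrences per one million tokens."""
    return count / corpus_size * 1_000_000

# (matches for "utterly", corpus size in tokens), taken from the fractions above
samples = {
    "BNC": (1248, 112_156_361),
    "COCA": (4401, 542_341_719),
}

for name, (count, size) in samples.items():
    relative = count / size
    print(
        f"{name}: relative {relative:.7f}, "
        f"percent {relative * 100:.5f}%, "
        f"per million {per_million(count, size):.1f}"
    )
```

All three representations carry the same information. Only the position of the decimal point changes, so pick the one that is easiest to read for your data.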

Now how about the words utterly co-occurs with? To keep it simple, let’s focus on the British data.

    BNC
    count utterly by word on match[1]
    ...
    34 and [#91-#124]
    28 . [#20-#47]
    27 different [#408-#434]
    20 , [#0-#19]
    13 at [#142-#154]
    13 ridiculous [#929-#941]
    11 impossible [#645-#655]
    11 without [#1207-#1217]
    10 miserable [#772-#781]
    10 opposed [#820-#829]

The two most frequently co-occurring adjectives are different and ridiculous. However, different is more than twice as frequent. Does that mean it is more strongly associated? No! The adjective different is itself also much more frequent than ridiculous (~47500 vs 1777).
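A very rough way to see this is to ask what share of all occurrences of each adjective follows utterly. The sketch below uses the counts quoted above (47,500 is only approximate). Note that this simple share is just a first normalization step I am using for illustration. It is not one of the association measures we will cover later.

```python
# Counts from the BNC query above: occurrences directly after "utterly"
# and overall frequency of the adjective (the latter is approximate for "different").
pairs = {
    "different": {"with_utterly": 27, "overall": 47_500},
    "ridiculous": {"with_utterly": 13, "overall": 1_777},
}

def share_with_utterly(with_utterly: int, overall: int) -> float:
    """Proportion of an adjective's occurrences that directly follow 'utterly'."""
    return with_utterly / overall

shares = {adj: share_with_utterly(**counts) for adj, counts in pairs.items()}

for adj, share in shares.items():
    print(f"{adj}: {share:.5f} ({share * 100:.3f}% of its occurrences)")

print("ridiculous vs different:", round(shares["ridiculous"] / shares["different"], 1))
```

Relative to its own frequency, ridiculous occurs after utterly far more often than different does, even though its absolute count is lower.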

4.5 Perception of quantities

Relative frequency and other ratios are not only interesting when you need to adjust for different sized samples. Human perception is also based on ratios, not absolute values. In some sense our brains also treat experiences on a sample-wise basis. Of course, you would not normally speak of experience as coming in samples, but remember the ideas of contiguity and similarity. We know that some of the structure in memory, and therefore structure in language, comes from spatially and temporally contiguous experiences, and/or the degree of similarity to earlier experiences. But of course we don’t remember every word and every phrase in the same way. Repetition and exposure to a specific structure play a key role. Now that we have a little experience with assessing what is relatively frequent in linguistic data, we should start thinking about what is frequent in our perception. An observation that has repeatedly been made in experiments is that absolute differences in the frequency of stimuli become less and less informative the more frequent the stimuli are. This is captured by the Weber-Fechner law.

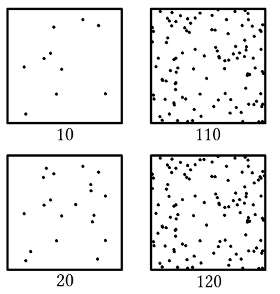

The observation is that the difference between 10 and 20 items is perceived as much larger than the difference between 110 and 120 items, even though they are the same in absolute terms. The higher the frequency of items, e.g. words, the larger the difference to another set has to be in order to be perceivable. This is strongly connected to the heavily skewed frequency distributions described by Zipf’s Law, which we will talk about in Weeks 7 and 8.
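The 10 vs 20 and 110 vs 120 example can be put into numbers with a few lines of Python. This is only a sketch: it assumes the common logarithmic reading of the Weber-Fechner law, in which perceived magnitude grows with the logarithm of the stimulus, and it sets the scaling constant to 1 for simplicity.

```python
import math

def perceived_difference(a: float, b: float) -> float:
    """Difference between two quantities on a log scale, i.e. log(b / a).

    Assumes perceived magnitude = log(stimulus), with the scaling constant set to 1.
    """
    return math.log(b / a)

small_numbers = perceived_difference(10, 20)    # absolute difference: 10
large_numbers = perceived_difference(110, 120)  # absolute difference: 10

print(f"10 vs 20:   {small_numbers:.3f}")
print(f"110 vs 120: {large_numbers:.3f}")
```

The absolute difference is 10 in both cases, but on the log scale the first difference is several times larger than the second, because what counts is the ratio between the two quantities.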

4.6 Collocation as probabilistic phenomenon

Collocations are co-occurrences that are perceived and memorized as connected. Some collocations are stronger, some weaker, and some are so strong that one or all elements only occur together, making it an idiom or fixed expression.

- spoils of war: idiom

- declare a war: strong collocation

- fight a war: weak collocation

- describe a war: not a collocation

- have a war: unlikely combination

- the a war: ungrammatical

The above examples are ordered according to the probability that they can be observed. We will get into the details of how we can quantify this exactly in the lecture and in future seminar classes. If you are impatient, www.collocations.de has a very detailed guide to how that works. For now, the logic is simply that we consider not only the different frequencies of the individual phrases, as in the examples above, but also the frequencies of each word in the phrases, the words in the corpus, and all attested combinations of [] a war.

The important takeaway is that a defining property of collocation is gradience. That means that it is a matter of degree. The more frequent a collocation is, relatively speaking, the more likely it is to be memorized as a unit. It is not a question of either or, or black and white. This idea has since been described for most if not all linguistic phenomena, including grammatical constructions, compound nouns, and even word classes, just to name a few.

Lemma

This week we are working towards enriching our text data with information. We might be more interested in lexical units rather than word forms. The concept of lemma is commonly used to approach this. Remember, lemma usually has a technical definition that is used to gain information about lexemes the same way as we look at tokens to gain information about words. But they are not the same.

A lemma, similar to a lexeme, groups certain forms of a word. What are all the grammatical forms of be, cut, tree, nice, beautiful?

- be, am, are, is, were, was, been, ’s, ’m, ’re, ?being

- cut, cuts, (cut, cut), ?cutting

- tree, trees, tree’s, trees’

- nice, nicer, nicest

- beautiful

A lemma comprises all the inflectional forms of a word. This includes forms with grammatical affixes (tree, trees) and suppletive forms (go, went). Derivational suffixes like the adjectival -ly are not included. Of course, this requires a clear definition of inflection and derivation. For example, there might be disagreement among researchers about whether participial -ing is derivational or inflectional. There is also the issue of whether the past participle of some verbs like cut is to be seen as a separate “form” or not.

When it comes to the technical side of research, you have to be aware of the decisions taken during lemmatization of corpus data as to what counts and what doesn’t. The operational definition of lemma might not match the relevant definition of lexeme as a linguistic concept.
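To make the idea of an operational definition concrete, here is a toy sketch in Python. The lemma table is hand-written from the forms listed above, and the example sentence is invented. Real lemmatizers are far more sophisticated and make their own decisions, for instance about -ing forms, which I have simply left out here.

```python
from collections import Counter

# Hypothetical, hand-written lemma table based on the forms listed above.
# Leaving out "being" and "cutting" is itself an operational decision.
LEMMA_OF = {
    "be": "be", "am": "be", "are": "be", "is": "be",
    "were": "be", "was": "be", "been": "be",
    "cut": "cut", "cuts": "cut",
    "tree": "tree", "trees": "tree",
    "nice": "nice", "nicer": "nice", "nicest": "nice",
}

def lemma_counts(tokens):
    """Count lemmas instead of word forms; unknown forms count as their own lemma."""
    return Counter(LEMMA_OF.get(t.lower(), t.lower()) for t in tokens)

# Invented example sentence, split on whitespace for simplicity.
tokens = "The trees were nice but the tree is nicer".split()
counts = lemma_counts(tokens)

for lemma, n in counts.most_common():
    print(lemma, n)
```

Counting word forms would give you tree and trees once each. Counting lemmas gives you tree twice. Which of the two is the right number depends on your research question.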

4.6.1 Distribution

Information about the frequency of a word or its forms can already be very informative. We can extend this idea easily and look at the larger units a word or lemma occurs in. Frequency information about the distribution is much more complex, but is based on the same underlying concepts and measured with the same tools.

As we will see in the upcoming reading, Justeson & Katz (1991), the distribution of adjective pairs plays a crucial role in the formation of antonym pairs. There, the deciding factor is whether they occur together in the same context: different form, same context. We could flip this around and look at words with the same form that occur in wildly different contexts. A special case of this is homonymy.

How can we find out if something is a homonym if we do not know the meaning or want to keep intuition out of the picture?

Animal or sport utensil?

- Maybe I’m a fruitarian bat

- … with a straighter bat than some of the Englishmen

- The unfortunate starved bat was then returned

- And not simply a bat, but an autographed bat

(examples from The BNC Consortium 2007)

In this example, the grammatical structure is similar. We find attributive adjectives preceding bat, which is typical for nouns. However, the meaning of the adjectives provides enough context to disambiguate the two uses of bat. The lexicon is structured by both grammar and meaning. If you expanded this to more co-occurrence patterns, e.g. with verbs or even different text types, two clearly distinct patterns would emerge. The animal bat eats, which is similar to other animals, whereas the utensil bat strikes like other club-like devices. A giraffe rarely strikes and a tennis racket doesn’t eat. They are each part of distinct lexical fields. Distribution plays a defining role in the structure of those fields, and therefore of our lexicon.

4.6.2 Association

A key component of human memory is association. The lexicon is organized in associative networks. What we perceive together frequently, we associate as belonging together. This is also referred to as spatial and temporal contiguity.

- law and …?

    - order

- good or …?

    - bad, evil

- the number of the …?

    - ??beast

- spoils of …?

    - ??war

The first words that come to mind when you read the first two fragments are most likely order and bad, completing law and order and good or bad. For the other two examples, more variation is expected. A metal fan might readily come up with beast, since the song of the same name is part of their cultural experience and therefore very frequent for them. spoils of war might not be a phrase that everyone is familiar with at all. As a word, spoils is very rare; yet there is a strong association with the phrase. If it is encountered, it occurs together with war more often than not.

4.7 Homework

At the institute, we have a host of corpora that are readily available for our students. We interact with these data sets via a program called Corpus Workbench (Evert & Hardie 2011). In order to interact with our Corpus Lab at the institute, you need to do some setup. The previous blog posts here are full of examples, and there are also plenty of examples to be found in the tutorials in the links section. Go through the steps below and the links on the Wiki and try to run a few queries.

- Step 1: Set up login

- Step 2: Set up CWB

- Step 3: Recap Corpus Structure

- Step 4: Download and make yourself familiar with the tutorials

- Step 5: Run your first query

There are also some older YouTube tutorials that might be helpful. However, do not follow any setup tutorials on YouTube.

4.8 Tip of the Day

Today: Multitasking

Learning an academic discipline takes a lot of time and focus. However, some aspects are like learning a language or motor skills. It might sound weird, but knowledge, especially theoretical, is like a muscle you can train. So here is my suggestion for how to get better at Linguistics or Literary Studies or whatever subject you are interested in: Listen to lectures, talks, podcasts and other content in the background.

Great topics to passively consume are:

- Repeating or recapping theory, e.g. Cognitive Linguistics

- Philosophy of Science, highly interesting, vastly important, but oft neglected

- Sciences that are not your major

Here are some activities I frequently use to bombard myself with knowledge.

- weight or endurance training

- practicing an instrument (especially repetitive technical exercises)

- cooking

- cleaning, tidying, building Ikea tables ;)

These activities either do not require your full mental focus or have long pauses, so your thoughts are free to meander through the depths of science. Nowadays, a lot of talks or even full lectures can be found online, and with online teaching taking off, there will be ever more.

Linguistics

Luckily, we are not the only university to have taught linguistics online. Here are some nice channels to binge watch both actively and passively.

- Martin Hilpert: Has a variety of lectures and full courses on all things linguistics.

- The Virtual Linguistics Campus: Old but gold.

- People without YouTube channels who are great lecturers: Adele Goldberg, Joan Bybee, George Lakoff, Geoffrey Pullum. I have found many of their lectures and interviews online on various channels and platforms.

- NativLang: Probably my favorite language channel. Animation videos on a variety of language related topics. Focus on Cross-Linguistics.

Other sciences

If you are a curious person, and if you appreciate the academic endeavor, chances are you are interested in other sciences, too. Knowing subjects outside the social sciences may help you in unexpected ways. Here are my go-to channels to listen to in the background.

- mailab: Focus on (bio-)chemistry, but mostly deals with current debates in the media. You can learn a lot about how news outlets interpret and sometimes misrepresent scientific studies.

- Closer to the Truth: Philosophy. Dealing with the big questions. How do we know facts? Why should we trust in Science? What are hypotheses and theories and why bother?

- Statquest: Pleasantly cringey statistics videos.

- zedstatistics: More in depth. (Less cringe. :( )

- PBS Space Time: Astrophysics. Popular science without the usual dumbing down. Great stuff to listen to even if you understand nothing. :D

- 3Blue1Brown: Mathematical concepts with animations instead of formulae. I was horrible at maths in school but I always had a sense that it is actually a very beautiful subject. Wish I had visualizations like these back then.

- Computerphile: Various computer science topics

I have not yet explored the world of audio books and audio podcasts, but I’m sure there is a lot of great stuff out there.

If you discover anything, let me know! :)